When discussing cloud storage costs, network expenses are often a significant hidden factor. Data transfer between storage and other services can lead to unexpected charges, especially as usage scales. During the Build 2024 event, Microsoft quietly published a small but important update to networking costs. This article summarizes how networking costs can affect cloud storage in Azure and what to consider during design from a FinOps perspective.

All figures in this article are rough estimates of network data transfer costs only and don’t reflect any individual customer agreements. For precise estimates, I recommend checking the latest official pricing, contacting your Microsoft representative, or running a limited PoC to avoid unexpected charges.

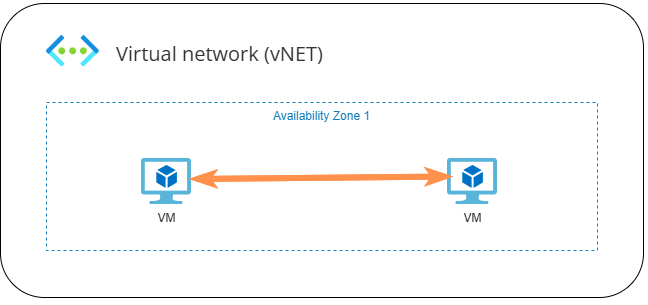

Single-AZ transfers within a single vNET

Any data transfers between VMs within a single vNET in a single availability zone (AZ) have been free, and there are no announced plans to start charging for them.

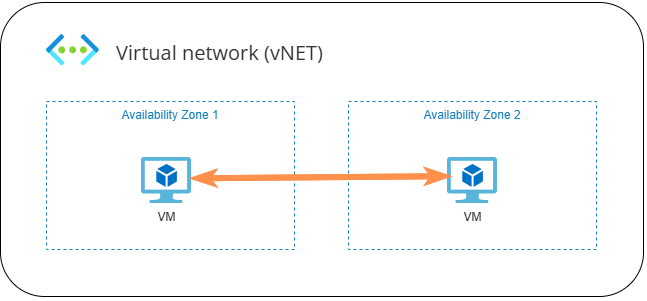

Cross-AZ transfers within a single vNET

To improve your workload resiliency, you might want to scale out underlying resources across multiple availability zones. This architecture reduces the probability of downtime, so when one AZ experiences an outage, the other remains operational.

A bit of history: In 2021, Microsoft announced plans to start billing for data transfers across availability zones (AZs) at some point in the future and said it would announce the launch date 3 months in advance. The plan stayed pending for 3 years, until April 2024, when Microsoft began to implement these charges. Meanwhile, the Microsoft Learn and Azure pricing documentation was inconsistent: only the Bandwidth pricing page mentioned this cost.

With this cost in place, any data transfer between two AZs, even within a single vNET in the same region, is charged per transferred GB.

At the end of May 2024, during the Microsoft Build conference, Microsoft announced in a short blog post that it was removing this charge, and so far, it appears there are no plans to reintroduce it.

Impact to Cloud Storage

When I talk about Cloud Storage in this context, I am referring to TBs of data that are frequently accessed by an application. Because native disk services lack important storage features (thin provisioning, data deduplication, snapshots, etc.), this type of storage typically relies on a smarter SAN solution, such as Pure Cloud Block Store™ (CBS).

SAN solutions are typically AZ-specific, meaning the underlying resources are deployed within a single AZ. This architecture reduces storage latency and improves performance.

For workloads deployed in a single AZ, the best practice is to co-locate the SAN in the same AZ as the workload. Deploying it into a different AZ might lead to slower performance and violates the main concept of isolated AZs for resiliency. It increases the probability of downtime, because an outage in either the application’s AZ or the storage’s AZ takes the whole solution down. Additionally, whenever cross-AZ transfers are billed, such an architecture adds those charges to your invoice.
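The downtime argument can be made concrete with a little arithmetic. The sketch below assumes that AZ outages are independent and uses a hypothetical per-AZ availability of 99.9% (an illustrative figure, not an Azure SLA). `combined_availability` is a helper name introduced here for illustration.

```python
import math


def combined_availability(*availabilities: float) -> float:
    """Availability of a solution that needs every listed component to be up.

    Assumes the components fail independently of each other.
    """
    return math.prod(availabilities)


# Hypothetical per-AZ availability, for illustration only.
AZ_AVAILABILITY = 0.999

# Application and SAN in the same AZ: the solution depends on one AZ.
same_az = combined_availability(AZ_AVAILABILITY)

# Application and SAN in different AZs: the solution depends on both AZs.
split_az = combined_availability(AZ_AVAILABILITY, AZ_AVAILABILITY)

print(f"App and SAN in one AZ:        {same_az:.4%}")
print(f"App and SAN in different AZs: {split_az:.4%}")
```

Under these assumptions, splitting the application and its storage across two AZs lowers the combined availability, because the solution now depends on both zones being up at the same time.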

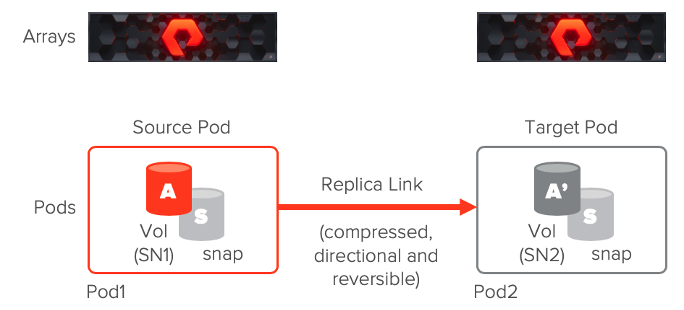

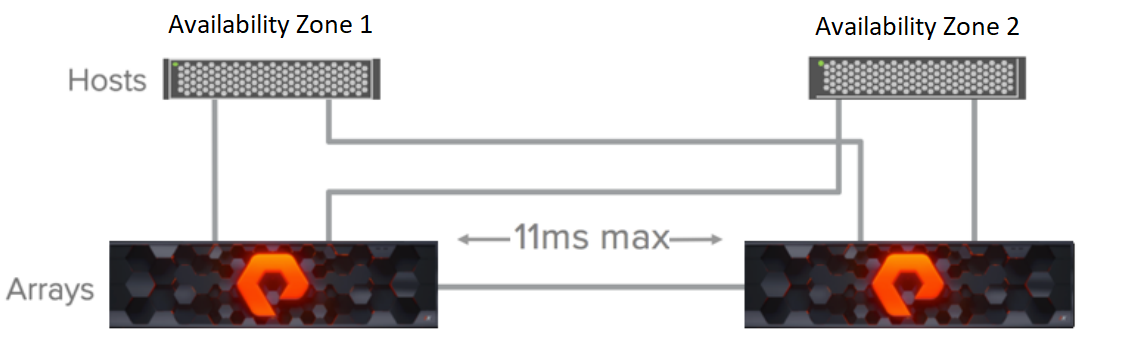

For more important workloads deployed across multiple AZs, you can deploy two CBS arrays (in different AZs within the same vNET) and replicate between them.

ActiveDR

One of the replication types available in CBS is asynchronous replication with a near-zero RPO, called ActiveDR.

A big advantage of this replication is that only deduplicated and compressed data is transferred, not the raw data. Since Microsoft removed the cross-AZ charge, this no longer affects the bill, but it still significantly reduces the time required to transfer the same logical amount of data. This becomes significant in the next FinOps tip below.
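To get a feel for the time savings, here is a minimal sketch of the arithmetic. The 10 Gbps link speed and the 4:1 data reduction ratio are hypothetical values chosen for illustration, not CBS specifications, and `transfer_hours` is a helper name introduced here. It also assumes decimal terabytes and a fully utilized link.

```python
def transfer_hours(logical_tb: float, link_gbps: float, reduction_ratio: float = 1.0) -> float:
    """Hours needed to replicate `logical_tb` of data over a `link_gbps` link.

    `reduction_ratio` is the assumed dedupe + compression ratio
    (4.0 means only a quarter of the logical data goes over the wire).
    """
    if link_gbps <= 0 or reduction_ratio <= 0:
        raise ValueError("link_gbps and reduction_ratio must be positive")
    bits_on_wire = logical_tb * 1e12 * 8 / reduction_ratio
    seconds = bits_on_wire / (link_gbps * 1e9)
    return seconds / 3600


# Hypothetical inputs: 100 TB of logical data over a 10 Gbps link.
raw = transfer_hours(100, 10)
reduced = transfer_hours(100, 10, reduction_ratio=4.0)

print(f"Raw data:     {raw:.1f} h")
print(f"Reduced data: {reduced:.1f} h")
```

The transfer time scales inversely with the reduction ratio, so the actual benefit depends entirely on how well a given data set deduplicates and compresses.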

ActiveCluster

Another type of replication between two CBS instances that can be used here is called ActiveCluster™. This setup provides active-active synchronous replication with zero RPO and RTO, where each CBS instance primarily serves applications within its respective AZ.

Please note that with ActiveCluster replication, the benefit of replicating only deduplicated and compressed data does not apply, since every write must be committed on both arrays synchronously.

Multiple vNETs, Single/Cross-AZ Communication

All the scenarios above discussed having all resources within a single vNET.

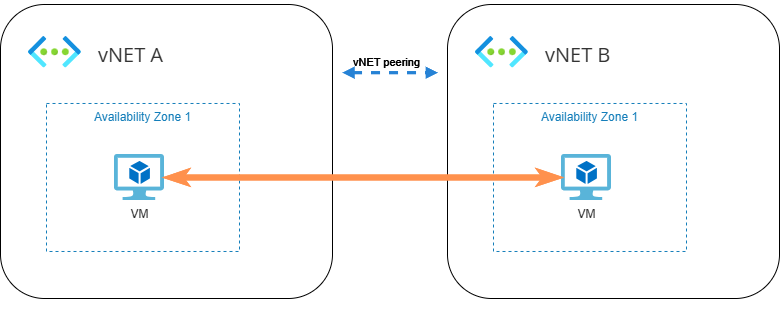

When a resource is located in a different vNET and accessed via vNET peering/NVA (regardless of whether it’s in the same or a different AZ), another networking cost applies: the charge for data transferred via vNET peering. The vNET peering service itself is free, but ingress and egress traffic is charged at both ends of the peered networks.

vNET peering within the same region:

| Transfer | Price |
|---|---|
| Inbound data | $0.01 per GB |
| Outbound data | $0.01 per GB |

vNET peering within the same region is charged at $0.02 per GB ($0.01 for egress from the first vNET and $0.01 for ingress to the second vNET), regardless of whether the data physically stays within the same AZ.

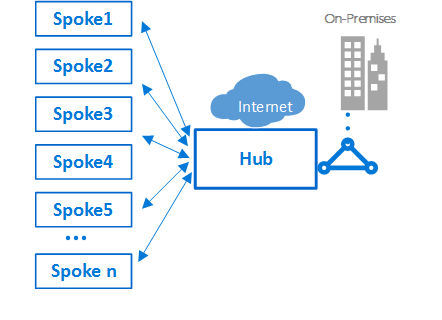

Following best practices for creating an Azure landing zone in an enterprise environment typically results in a more complex network topology. The most common is the Hub and Spoke topology, where multiple Spoke vNETs (containing various workloads) are connected to a single Hub vNET, which provides common connectivity to on-premises, security appliances, and more, via vNET peering.

With this topology, try to limit the number of hops as much as possible, because each vNET peering in the path incurs charges for both egress and ingress.

For communication between two Spoke vNETs (e.g. a VM consuming storage from a SAN in another vNET), consider co-locating these resources in a single vNET or creating a direct vNET peering between these vNETs.

sequenceDiagram

participant vNET 1

participant Hub vNET

participant vNET 2

vNET 1->> Hub vNET: egress from vNET 1 ($0.01) + ingress to Hub vNET ($0.01) = $0.02

Hub vNET ->> vNET 2: egress from Hub vNET ($0.01) + ingress to vNET 2 ($0.01) = $0.02

In total, this architecture costs $0.04 per GB.
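The per-hop arithmetic generalizes to any path through peered vNETs. The sketch below assumes every consecutive pair of vNETs in the path is joined by one same-region peering at the rates quoted above. `path_rate_per_gb` is a name introduced here for illustration.

```python
from decimal import Decimal

# Same-region vNET peering rates quoted above (USD per GB).
EGRESS_PER_GB = Decimal("0.01")
INGRESS_PER_GB = Decimal("0.01")


def path_rate_per_gb(path: list[str]) -> Decimal:
    """Per-GB peering charge for traffic that traverses the vNETs in `path`.

    Each consecutive pair of vNETs is assumed to be joined by one
    same-region peering, billed for egress on one side and ingress on the other.
    """
    if not path:
        raise ValueError("path must contain at least one vNET")
    peerings = len(path) - 1
    return peerings * (EGRESS_PER_GB + INGRESS_PER_GB)


via_hub = path_rate_per_gb(["vNET 1", "Hub vNET", "vNET 2"])
direct = path_rate_per_gb(["vNET 1", "vNET 2"])
same_vnet = path_rate_per_gb(["vNET 1"])

print(f"Routed via Hub:   ${via_hub} per GB")
print(f"Direct peering:   ${direct} per GB")
print(f"Within one vNET:  ${same_vnet} per GB")
```

Every extra vNET in the path adds another $0.02 per GB, which is why a direct spoke-to-spoke peering halves the rate compared with routing through the Hub.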

Model scenario no. 1: transferring 100 TB of data, same region, cross-AZ:

| Method | Total |
|---|---|
| Within single vNET | |
| Hub&spoke (routed via Hub, 2x vNET peerings) | $4,000 |
| Hub&spoke (direct spoke-spoke vNET peering) | $2,000 |

Model scenario no. 2: transferring 100 TB of data, same region, single AZ:

| Method | Total |
|---|---|
| Within single vNET | $0 |
| Hub&spoke (routed via Hub, 2x vNET peerings) | $4,000 |
| Hub&spoke (direct spoke-spoke vNET peering) | $2,000 |
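The totals in both tables follow from the $0.02 per GB peering rate. The sketch below reproduces them, assuming decimal terabytes (1 TB = 1,000 GB), which is the conversion that matches the table values. It covers the vNET peering component only, and `peering_cost` is a name introduced here for illustration.

```python
from decimal import Decimal

GB_PER_TB = 1000  # assumption: decimal terabytes, consistent with the table totals
PEERING_RATE_PER_GB = Decimal("0.02")  # $0.01 egress + $0.01 ingress per peering


def peering_cost(data_tb: int, peerings: int) -> Decimal:
    """Total vNET peering charge (USD) for moving `data_tb` across `peerings` peerings."""
    return data_tb * GB_PER_TB * peerings * PEERING_RATE_PER_GB


# Number of vNET peerings crossed by each method in the tables.
methods = {
    "Within single vNET": 0,
    "Hub&spoke (routed via Hub)": 2,
    "Hub&spoke (direct spoke-spoke peering)": 1,
}

for name, peerings in methods.items():
    print(f"{name}: ${peering_cost(100, peerings):,.2f}")
```

Because the peering charge does not depend on the AZ, the same function yields identical peering totals for both scenarios.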

AWS difference

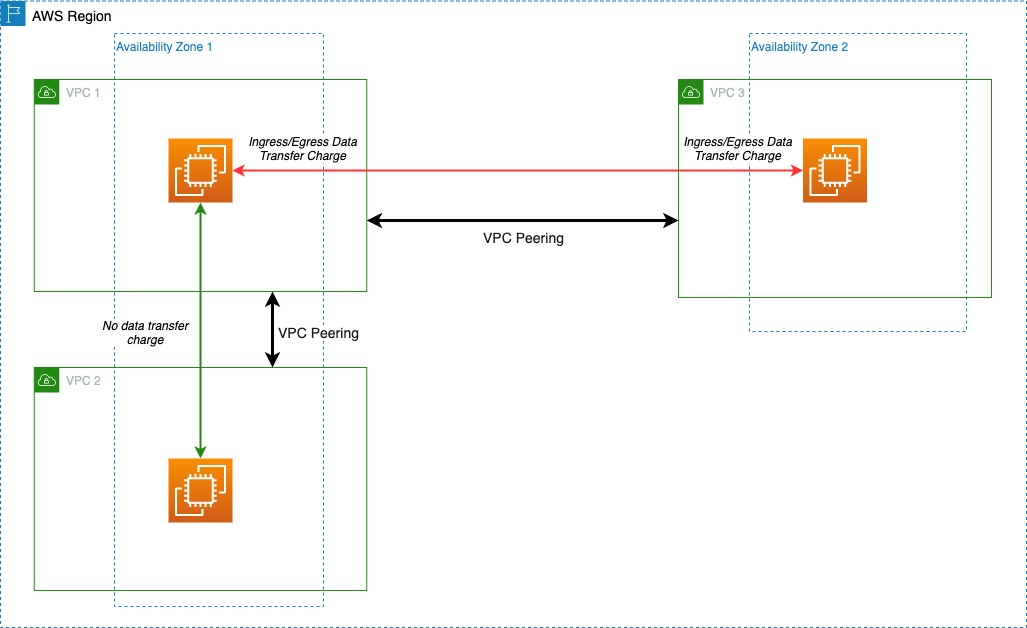

There is a significant difference in networking costs between Azure and AWS. AWS does not charge for data transfer between peered VPCs if the source and destination remain within the same availability zone. On AWS, none of the variants in scenario no. 2 would be charged.

Private Link

To close this article, I would like to discuss the option of exposing cloud storage via Private Link. Despite its various benefits, Private Link incurs additional costs: each Private Endpoint is priced at $0.01 per hour. On top of that, inbound and outbound data processed by Private Endpoints is charged, and the data transfer charges mentioned earlier still apply. For large data transfers, this can become a significant hidden cost.
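As a rough sketch of how these charges add up, the function below combines the hourly endpoint fee with a per-GB data processing fee. The $0.01 per hour rate comes from the text above. The 730-hour month is an assumption, and the data processing rate is deliberately left as a parameter because it is not quoted here; the value used in the example is a placeholder, not a published price. `private_endpoint_monthly_cost` is a name introduced for illustration.

```python
from decimal import Decimal

ENDPOINT_RATE_PER_HOUR = Decimal("0.01")  # per Private Endpoint, from the text above
HOURS_PER_MONTH = 730  # assumption: average number of hours in a month


def private_endpoint_monthly_cost(
    endpoints: int,
    gb_processed: int,
    processing_rate_per_gb: Decimal,
) -> Decimal:
    """Monthly Private Endpoint cost (USD): fixed hourly fee plus data processing."""
    fixed = endpoints * HOURS_PER_MONTH * ENDPOINT_RATE_PER_HOUR
    variable = gb_processed * processing_rate_per_gb
    return fixed + variable


# Placeholder processing rate for illustration only; check the official pricing.
PLACEHOLDER_RATE = Decimal("0.01")

idle = private_endpoint_monthly_cost(1, 0, PLACEHOLDER_RATE)
busy = private_endpoint_monthly_cost(1, 100_000, PLACEHOLDER_RATE)

print(f"One idle endpoint:           ${idle:,.2f} per month")
print(f"One endpoint, 100 TB traffic: ${busy:,.2f} per month")
```

The fixed fee is small, so for storage traffic the data processing term dominates. Any vNET peering charges on the path come on top of this figure.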